Machine Learning Pipelines: From Data Preparation to Model Deployment

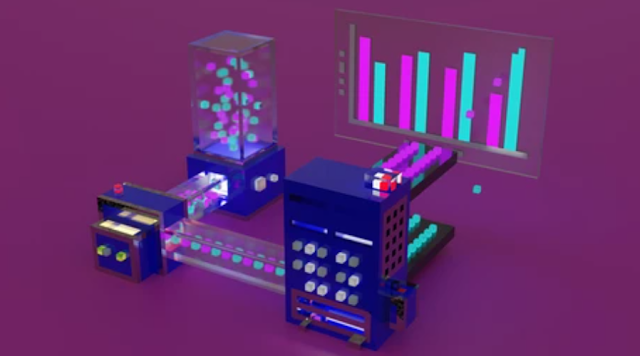

Machine learning pipelines are a systematic approach to building and deploying machine learning models. They encompass a series of steps, from data preparation and feature engineering to model training, evaluation, and deployment. The main purpose of a machine learning pipeline is to streamline the process, ensure reproducibility, and facilitate efficient model development. Here are the key stages involved in a typical machine learning pipeline:

Data Collection:

The first step in a machine learning pipeline is to collect the necessary data. This may involve gathering data from various sources, such as databases, APIs, or files. The collected data should be representative of the problem at hand and cover all relevant aspects.

Data Preprocessing and Feature Engineering:

Once the data is collected, it needs to be preprocessed and transformed into a suitable format for modeling. This includes handling missing values, addressing outliers, scaling features, encoding categorical variables, and performing feature extraction or selection. Data preprocessing and feature engineering aim to enhance the quality and relevance of the data for model training.
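As a rough illustration of these preprocessing steps, here is a minimal sketch using only the Python standard library. The records, the field names (`age`, `city`), and the helper functions are all hypothetical, and the choice of median imputation and population standard deviation is an assumption rather than a recommendation.

```python
from statistics import mean, median, pstdev

# Hypothetical raw records; None marks a missing value.
rows = [
    {"age": 25, "city": "Paris"},
    {"age": None, "city": "Lima"},
    {"age": 35, "city": "Paris"},
    {"age": 45, "city": "Oslo"},
]

def impute_median(values):
    """Replace None with the median of the observed values."""
    fill = median(v for v in values if v is not None)
    return [fill if v is None else v for v in values]

def standardize(values):
    """Scale to zero mean and unit variance (population standard deviation)."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def one_hot(values):
    """Encode categories as 0/1 indicator columns, in sorted category order."""
    categories = sorted(set(values))
    return categories, [[int(v == c) for c in categories] for v in values]

ages = standardize(impute_median([r["age"] for r in rows]))
categories, city_columns = one_hot([r["city"] for r in rows])

# One feature row per record: scaled age followed by the city indicators.
features = [[a] + c for a, c in zip(ages, city_columns)]
```

In a real pipeline, the imputation value and the scaling statistics would normally be computed on the training split only and then reused on validation and production data, so that no information leaks from the evaluation data into training.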

Model Selection and Training:

After the data is prepared, the next step is to select an appropriate machine learning algorithm or model. This selection depends on the problem type (e.g., classification, regression), the nature of the data, and the desired outcome. The selected model is then trained on the training portion of the preprocessed data. Model training adjusts the model's parameters to minimize a loss function, such as mean squared error for regression or cross-entropy for classification, so that metrics like accuracy improve as a result.
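To make "adjusting the model's parameters to minimize the error" concrete, the sketch below fits a one-variable linear model with plain gradient descent on mean squared error. The toy data, the learning rate, and the number of epochs are assumptions chosen for illustration only.

```python
# Toy training set generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def mse(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b on the given data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit w and b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, mse={mse(w, b, xs, ys):.6f}")
```

Libraries typically hide this loop behind a `fit`-style call, but the idea is the same: repeatedly nudge the parameters in the direction that lowers the loss.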

Model Evaluation:

Once the model is trained, it needs to be evaluated to assess its performance and generalization capabilities. This is typically done using a separate validation dataset or through techniques like cross-validation. Various performance metrics, such as accuracy, precision, recall, or mean squared error, are calculated to measure the model's effectiveness. The evaluation results help identify any issues, such as overfitting or underfitting, and guide model refinement.
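The metrics and the cross-validation split mentioned above can be sketched in a few lines. The labels and predictions here are made up, and `k_fold_indices` is a hypothetical helper that uses contiguous folds without shuffling; in practice the data is usually shuffled first.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for k contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size

# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)

folds = list(k_fold_indices(len(y_true), k=3))
```

A large gap between training and validation scores is a common sign of overfitting, while poor scores on both suggest underfitting.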

Hyperparameter Tuning:

Machine learning models often have hyperparameters that need to be set before training, such as the learning rate, the regularization strength, or the depth of a tree. Hyperparameters control the behavior and complexity of the model and impact its performance. Hyperparameter tuning involves systematically exploring different combinations of hyperparameter values, for example with grid search or random search, and keeping the configuration that performs best on validation data.
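One simple way to explore combinations of hyperparameter values is an exhaustive grid search. The sketch below tunes a tiny one-dimensional k-nearest-neighbors classifier; the data points, the grid, and the helper names are all hypothetical, and the classifier is only a stand-in for whatever model is being tuned.

```python
from itertools import product

# Hypothetical (feature, label) pairs for training and validation.
train = [(1.0, 0), (2.0, 0), (3.0, 0), (6.0, 1), (7.0, 1), (8.0, 1)]
validation = [(2.5, 0), (4.0, 0), (5.5, 1), (7.5, 1)]

def knn_predict(train, x, k, weighted):
    """Predict a label from the k nearest training points."""
    neighbors = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = {}
    for xi, label in neighbors:
        # Optionally weight each vote by inverse distance.
        weight = 1.0 / (abs(xi - x) + 1e-9) if weighted else 1.0
        votes[label] = votes.get(label, 0.0) + weight
    return max(votes, key=votes.get)

def accuracy(train, validation, k, weighted):
    """Fraction of validation points classified correctly."""
    hits = sum(knn_predict(train, x, k, weighted) == y for x, y in validation)
    return hits / len(validation)

# Every combination in the grid is scored on the validation set.
grid = {"k": [1, 3, 5], "weighted": [False, True]}
results = []
for k, weighted in product(grid["k"], grid["weighted"]):
    params = {"k": k, "weighted": weighted}
    results.append((params, accuracy(train, validation, k, weighted)))

best_params, best_score = max(results, key=lambda item: item[1])
```

Grid search grows quickly with the number of hyperparameters, which is why random search or more targeted strategies are often preferred for larger search spaces. The score used for tuning should come from validation data that is kept separate from the final test set.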

Model Deployment:

After the model has been trained and evaluated, it can be deployed for production use. This involves integrating the model into a software application or system where it can receive input data and generate predictions. The deployment process may include packaging the model, exposing it through an API, setting up infrastructure, and monitoring the model's performance in real time.
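The packaging and prediction steps can be sketched without any web framework. In the example below, the model is just a dictionary of parameters saved as JSON, and `handle_request` is a hypothetical function that a real API endpoint might call; the file name, the `x` field, and the `version` tag are assumptions made for illustration.

```python
import json
import os
import tempfile

def save_model(params, path):
    """Package the model by writing its parameters to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(params, f)

def load_model(path):
    """Load previously saved model parameters."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)

def handle_request(model, request_body):
    """Parse a JSON request, validate it, and return a JSON response."""
    try:
        payload = json.loads(request_body)
        x = float(payload["x"])
    except (ValueError, KeyError, TypeError):
        return json.dumps({"error": "expected a JSON object with a numeric 'x'"})
    prediction = model["weight"] * x + model["bias"]
    return json.dumps({"prediction": prediction})

# Save a hypothetical linear model, reload it, and serve one prediction.
model_path = os.path.join(tempfile.mkdtemp(), "model.json")
save_model({"weight": 2.0, "bias": 1.0, "version": "example-1"}, model_path)
model = load_model(model_path)
response = handle_request(model, '{"x": 3}')
```

Keeping input validation next to the prediction call means malformed requests produce a clear error instead of an exception inside the model code.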

Model Monitoring and Maintenance:

Once the model is deployed, it is important to monitor its performance and make necessary adjustments over time. This includes monitoring for data drift, which occurs when the distribution of the input data changes, and for concept drift, which occurs when the relationship between the inputs and the target changes, and retraining or updating the model when necessary. Ongoing maintenance ensures that the model remains accurate and reliable as the data and business requirements evolve.
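A very basic check for a change in the input distribution compares recent production values of a feature against the values seen during training. The sketch below measures how far the live mean has moved, in units of the training standard deviation. The sample values and the threshold of 2.0 are assumptions, and production systems often use richer statistical tests than this.

```python
from statistics import mean, stdev

def mean_shift_score(reference, live):
    """Shift of the live mean from the reference mean, in reference standard deviations."""
    return abs(mean(live) - mean(reference)) / stdev(reference)

def needs_review(reference, live, threshold=2.0):
    """Flag the feature when the shift exceeds the (assumed) threshold."""
    return mean_shift_score(reference, live) > threshold

# Hypothetical feature values: training baseline and two production windows.
reference = [10.0, 11.0, 9.0, 10.5, 9.5, 10.0]
stable = [10.2, 9.8, 10.1, 9.9]
shifted = [14.0, 15.0, 14.5, 15.5]

stable_flag = needs_review(reference, stable)
shifted_flag = needs_review(reference, shifted)
```

A flagged feature does not by itself mean the model is wrong; it is a prompt to check prediction quality on fresh labeled data and decide whether retraining is needed.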

By following a machine learning pipeline, practitioners can efficiently develop, evaluate, and deploy machine learning models. The pipeline helps ensure a systematic and reproducible approach, facilitates collaboration between different stakeholders, and enables efficient model iteration and improvement. Additionally, automation tools and frameworks are available to streamline the pipeline process and make it easier to implement and manage machine learning projects.
