Deep Learning: Understanding Neural Networks and Deep Neural Networks

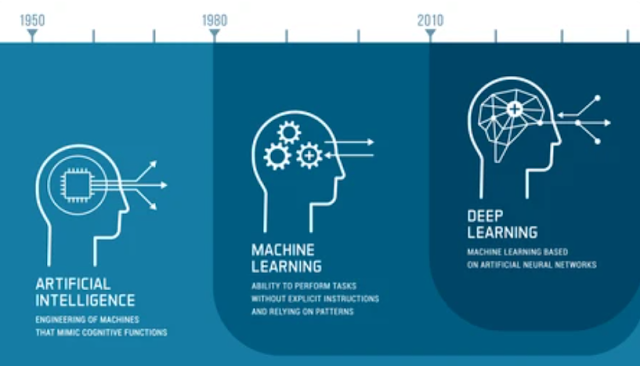

Deep learning is a subfield of machine learning that trains multi-layer artificial neural networks to make predictions or decisions. It has gained significant attention and success in recent years due to its ability to automatically learn intricate patterns and representations from large and complex datasets. At the heart of deep learning are neural networks, specifically deep neural networks (DNNs). Here, we will explore the principles and components of neural networks and deep neural networks.

Neural Networks:

Neural networks are computational models loosely inspired by the structure and functioning of the human brain. They consist of interconnected nodes, called artificial neurons or "units," organized into layers. Each unit receives input signals, computes a weighted sum of them (usually plus a bias term), applies an activation function, and passes the result on to the next layer. The weights attached to the connections between units determine how strongly each input influences the output, and they are the values adjusted during training.
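The computation performed by a single unit can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `unit_output`, the choice of a sigmoid activation, and all numeric values are assumptions made up for the example.

```python
import numpy as np

def unit_output(inputs, weights, bias):
    """Output of one artificial neuron: sigmoid(weights . inputs + bias)."""
    z = np.dot(weights, inputs) + bias   # weighted sum of the input signals
    return 1.0 / (1.0 + np.exp(-z))      # sigmoid squashes z into the range (0, 1)

# Illustrative values only (not taken from any real model)
x = np.array([0.5, -1.0, 2.0])   # three input signals
w = np.array([0.8, 0.2, -0.5])   # one weight per incoming connection
b = 0.1                          # bias term

y = unit_output(x, w, b)
print(y)
```

A larger weight makes the corresponding input count for more in the weighted sum, which is what "strength of the connection" means in practice.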

Key Components of Neural Networks:

- Input Layer: The input layer receives the input data, such as numerical values, images, or text, encoded as numbers (for example, pixel intensities or token indices).

- Hidden Layers: Neural networks typically consist of one or more hidden layers between the input and output layers. These layers perform computations and transform the input into more meaningful representations.

- Output Layer: The output layer produces the final prediction or decision based on the transformed input.

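- Activation Functions: Activation functions introduce non-linearities into the network, allowing it to learn complex, non-linear relationships between inputs and outputs. Without them, a stack of layers would collapse into a single linear transformation. Common choices include sigmoid and ReLU (Rectified Linear Unit) in hidden layers, and softmax in the output layer for multi-class classification.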
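The three activation functions named above can be written directly in NumPy. The sketch below uses their standard definitions; the function names and the sample vector `z` are chosen only for illustration, and the max-subtraction in `softmax` is a common numerical-stability trick rather than part of the mathematical definition.

```python
import numpy as np

def sigmoid(z):
    """Maps any real value into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Keeps positive values and replaces negative values with 0."""
    return np.maximum(0.0, z)

def softmax(z):
    """Turns a vector of scores into probabilities that sum to 1."""
    shifted = z - np.max(z, axis=-1, keepdims=True)  # avoids overflow in exp
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

z = np.array([-2.0, 0.0, 3.0])   # made-up pre-activation values
print(sigmoid(z))
print(relu(z))
print(softmax(z))
```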

Deep Neural Networks (DNNs):

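Deep neural networks are neural networks with multiple hidden layers. The depth refers to the number of hidden layers in the network. DNNs can learn hierarchical representations of the data by progressively extracting more abstract features in each layer. In image recognition, for example, early layers tend to respond to edges and textures, while later layers respond to shapes and whole objects. Greater depth lets a network capture more complex patterns and relationships, at the cost of more parameters to train.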
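To make "multiple hidden layers" concrete, here is a small sketch of a forward pass through a network whose depth is set by a list of layer sizes. The helper names `init_layers` and `forward`, the layer sizes, the random initialization, and the use of ReLU in the hidden layers are all assumptions for the example.

```python
import numpy as np

def init_layers(sizes, seed=0):
    """Create one (weights, biases) pair per connection between consecutive layers."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(x, layers):
    """Run x through every layer and return the activations of each layer."""
    activations = [x]
    h = x
    for i, (W, b) in enumerate(layers):
        z = h @ W + b
        is_output_layer = (i == len(layers) - 1)
        h = z if is_output_layer else np.maximum(0.0, z)  # ReLU in hidden layers only
        activations.append(h)
    return activations

# 4 inputs, three hidden layers of 8 units each, 2 outputs
layers = init_layers([4, 8, 8, 8, 2])
batch = np.ones((5, 4))            # a batch of 5 made-up input vectors
acts = forward(batch, layers)
print([a.shape for a in acts])
```

Adding or removing entries in the size list changes the depth without touching the rest of the code, which is one reason the layer-by-layer formulation is convenient.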

Training Deep Neural Networks:

Training DNNs involves the following steps:

Forward Propagation: The input data is fed into the network, and computations are performed layer by layer to produce an output.

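Loss Function: A loss function measures the difference between the predicted output and the true output, producing a single number that training aims to minimize. Common choices include mean squared error for regression and cross-entropy for classification.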

Backpropagation: Backpropagation computes the gradients of the loss function with respect to the network's weights and biases by applying the chain rule backward from the output layer to the input layer. An optimization algorithm such as gradient descent then uses these gradients to adjust the parameters in the direction that reduces the loss.

Iterative Training: The forward propagation, loss computation, and backpropagation steps are repeated on batches of training data until the loss stops improving or a fixed number of passes over the data (epochs) has been completed.
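The four steps can be put together in a short NumPy training loop. This is a minimal sketch under stated assumptions: the toy regression data, the 2-16-1 architecture, the tanh hidden activation, the mean squared error loss, and the hyperparameters (`learning_rate`, `batch_size`, 200 epochs) are all invented for illustration, and the gradients are derived by hand for this specific network.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data, made up for illustration: y = x0^2 + x1
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = X[:, :1] ** 2 + X[:, 1:]

# Parameters of a 2 -> 16 -> 1 network
W1 = rng.normal(0.0, 0.5, size=(2, 16))
b1 = np.zeros(16)
W2 = rng.normal(0.0, 0.5, size=(16, 1))
b2 = np.zeros(1)

def forward(inputs):
    """Forward propagation: returns hidden activations and predictions."""
    h = np.tanh(inputs @ W1 + b1)
    return h, h @ W2 + b2

def mse(pred, target):
    """Mean squared error loss."""
    return float(np.mean((pred - target) ** 2))

initial_loss = mse(forward(X)[1], y)
learning_rate, batch_size = 0.05, 32

for epoch in range(200):
    order = rng.permutation(len(X))              # reshuffle the data every epoch
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        xb, yb = X[idx], y[idx]

        # 1. Forward propagation
        h, pred = forward(xb)

        # 2. Loss: gradient of the mean squared error w.r.t. the predictions
        grad_pred = 2.0 * (pred - yb) / len(xb)

        # 3. Backpropagation: chain rule from the output back to the input
        grad_W2 = h.T @ grad_pred
        grad_b2 = grad_pred.sum(axis=0)
        grad_h = grad_pred @ W2.T
        grad_z1 = grad_h * (1.0 - h ** 2)        # derivative of tanh
        grad_W1 = xb.T @ grad_z1
        grad_b1 = grad_z1.sum(axis=0)

        # 4. Gradient descent: step against the gradient
        W1 -= learning_rate * grad_W1
        b1 -= learning_rate * grad_b1
        W2 -= learning_rate * grad_W2
        b2 -= learning_rate * grad_b2

final_loss = mse(forward(X)[1], y)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

In practice, frameworks compute the gradients in step 3 automatically; writing them out by hand here is only meant to show what backpropagation produces and how the optimizer consumes it.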

Applications of Deep Learning:

Deep learning has been successful in various domains, including:

- Computer Vision: Image recognition, object detection, and image generation.

- Natural Language Processing: Language translation, sentiment analysis, and text generation.

- Speech Recognition: Speech-to-text conversion and voice assistants.

- Recommender Systems: Personalized recommendations based on user behavior and preferences.

- Healthcare: Disease diagnosis, medical image analysis, and drug discovery.

- Autonomous Vehicles: Perception, decision-making, and control.

Deep learning's strength lies in its ability to automatically learn hierarchical representations of data, which greatly reduces the need for manual feature engineering. However, training deep neural networks typically requires large amounts of data, often labeled, and substantial computational resources. Ongoing research focuses on developing more efficient algorithms, addressing ethical concerns, and expanding the capabilities of deep learning in real-world applications.